FindIt

Designing an easily accessible support system to combat harassment in competitive gaming

Role

UX Researcher, UX Designer

Project Duration

Jan. 2023 - Mar. 2023

Design Challenge

In the fall of 2022, OpenIDEO hosted a design challenge on digital thriving, with co-sponsors including Riot Games, Fair Play Alliance, Sesame Workshop, and IDEO. Our Informatics class at the University of California, Irvine, led by Dr. Layne Jackson Hubbard, used OpenIDEO's design question as our inspiration for UX research & design:

“How might we design healthy, inclusive digital spaces that enable individuals and communities to thrive?”

Preliminary Research - Our Design Space

As defined by the Fair Play Alliance, a contributor to the design challenge, digital thriving is "the outcome of online spaces intentionally designed to foster combined feelings of well-being, accomplishment, belonging, and meaningful relationships in individuals, groups, and communities".

Narrowing down our design space, our team found common ground in the fact that we were gamers experienced with competitive video games such as League of Legends and Call of Duty. Thus, we decided to work in the competitive gaming design space.

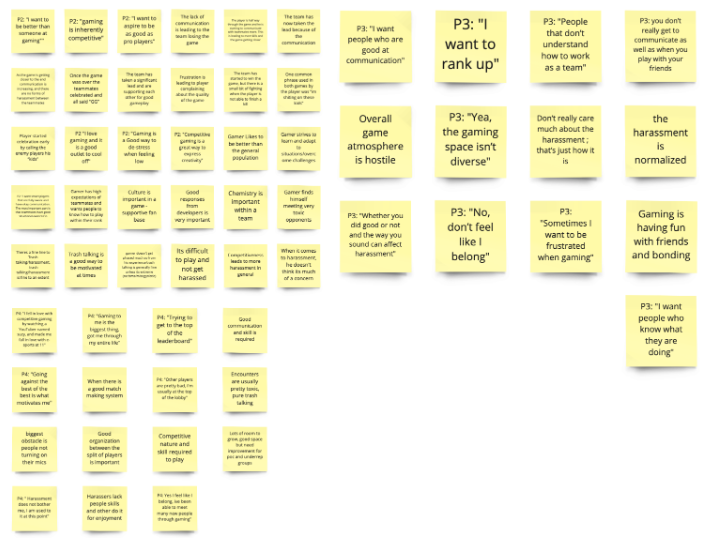

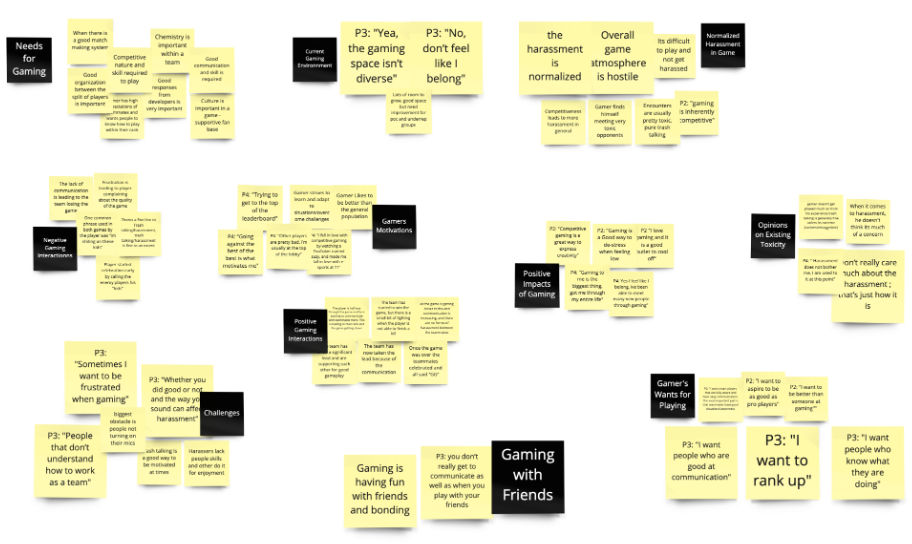

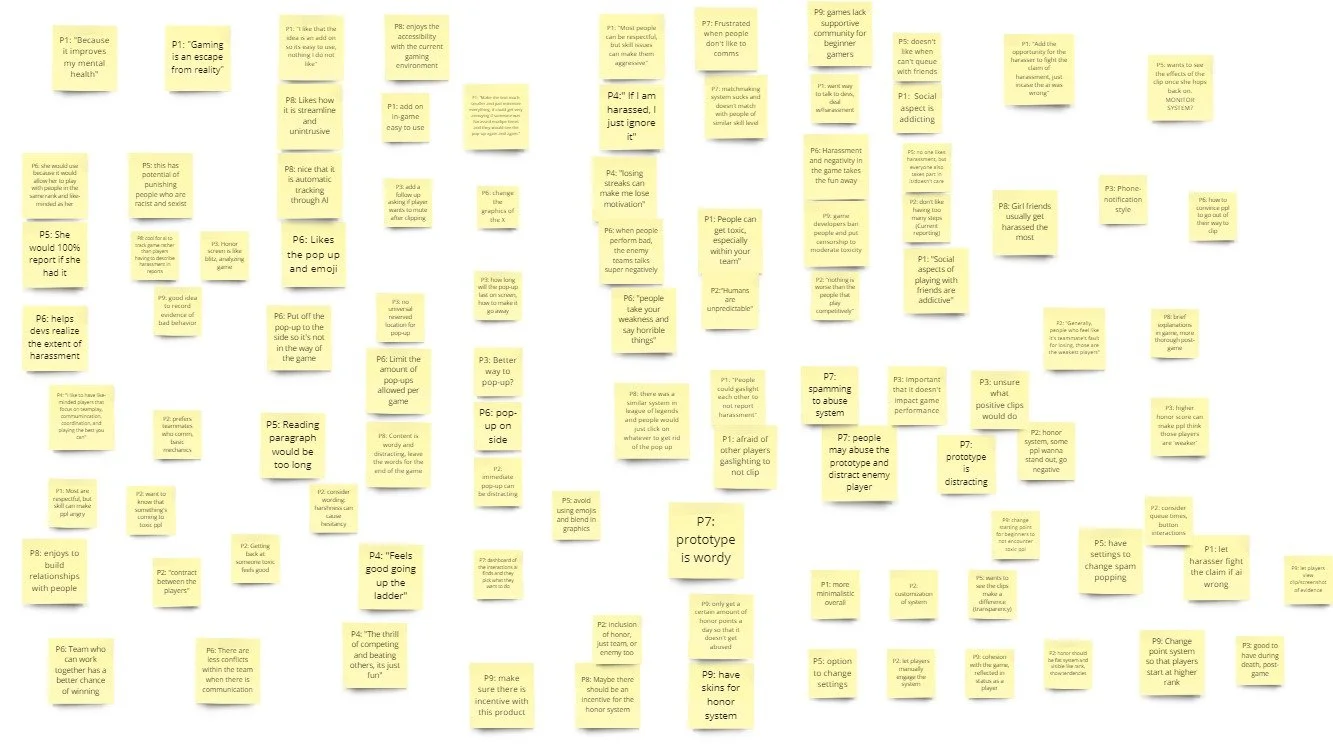

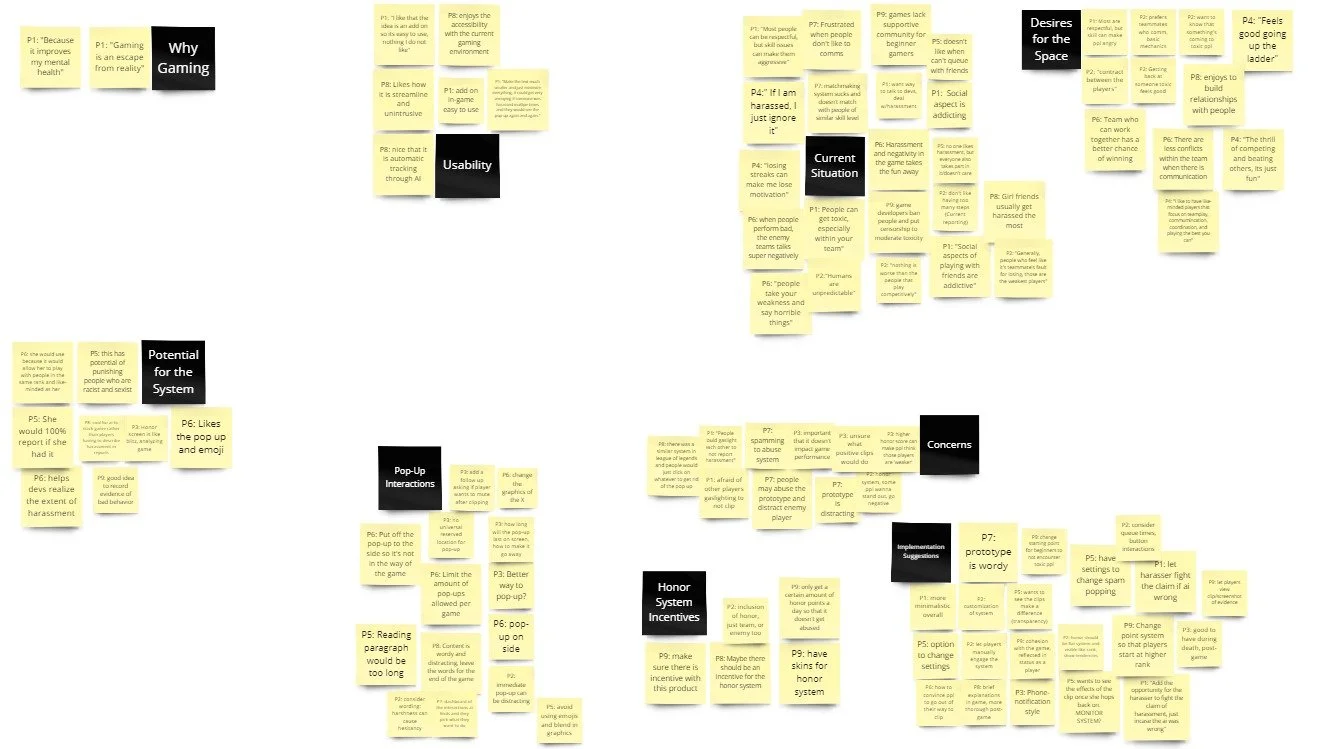

To better understand the space, our team conducted 7 pilot user observations and semi-structured interviews with gamers in the competitive gaming space. We then performed thematic analysis using affinity diagramming to organize our data and determine key insights.

Across these observations and interviews, participants described a pervasive lack of respectful behavior, to the point that they simply expect to see some form of toxicity or harassment in their games. They therefore want a more inclusive community that reinforces positive in-game behavior.

The current competitive gaming space perpetuates toxicity and harassment in its community through harmful, normalized behaviors that are ‘expected’ of the player base.

User Research: The Players

Because a major takeaway from our preliminary research was that toxicity is essentially normalized, we chose the following target audience:

Beginner Competitive Gamers. If players are the source of normalized toxicity in current games, then we will design for the future: educate incoming players and protect them from existing harm.

Alongside these beginner gamers, our team recognized that we also needed to understand everyone involved in the competitive gaming space. Another important audience is veteran gamers, who interact with beginners and factor into their gaming experience. These players are key to understanding existing toxicity, how it can affect new players, and how to keep it away from them. Game developers are an indirect stakeholder: they are responsible for creating these games and for shifting game norms and interactions.

To understand our stakeholders' pain points and needs, we conducted naturalistic user observations and semi-structured interviews with 10 participants who matched one of these stakeholder types. I conducted 2 of the 10 observations and interviews, with one veteran gamer and one beginner gamer. Once again, I worked with my team to conduct thematic analysis using affinity diagramming.

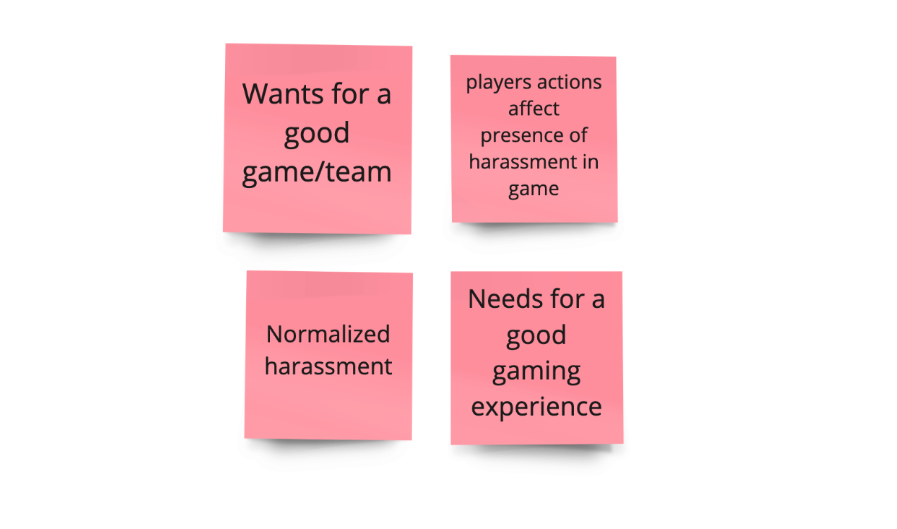

Based on the research I conducted with my team, we came to recognize primary pain points that our participants shared with us:

Normalized harassment in their games

Player actions that contribute to harassment, and overall game atmosphere

Competitiveness tends to lead to harassment

And, with these pain points in mind, our team identified these needs/desires from participants:

A good game session and/or team

Climbing up the ranks

Good chemistry with teammates

Like-minded players

Players, beginners and veterans alike, were found to share a simple desire: to have an enjoyable gaming session, which is determined in part by those they play with. However, normalized toxicity & harassment stand in the way of this desire.

Placing our focus on beginners in competitive gaming, we posed this as our design question:

“How might we enable new competitive team-based gamers to transition and deal with harassment in current competitive team-based gaming spaces, so that we may create healthier and more competitive gaming communities?”

Designing a Solution

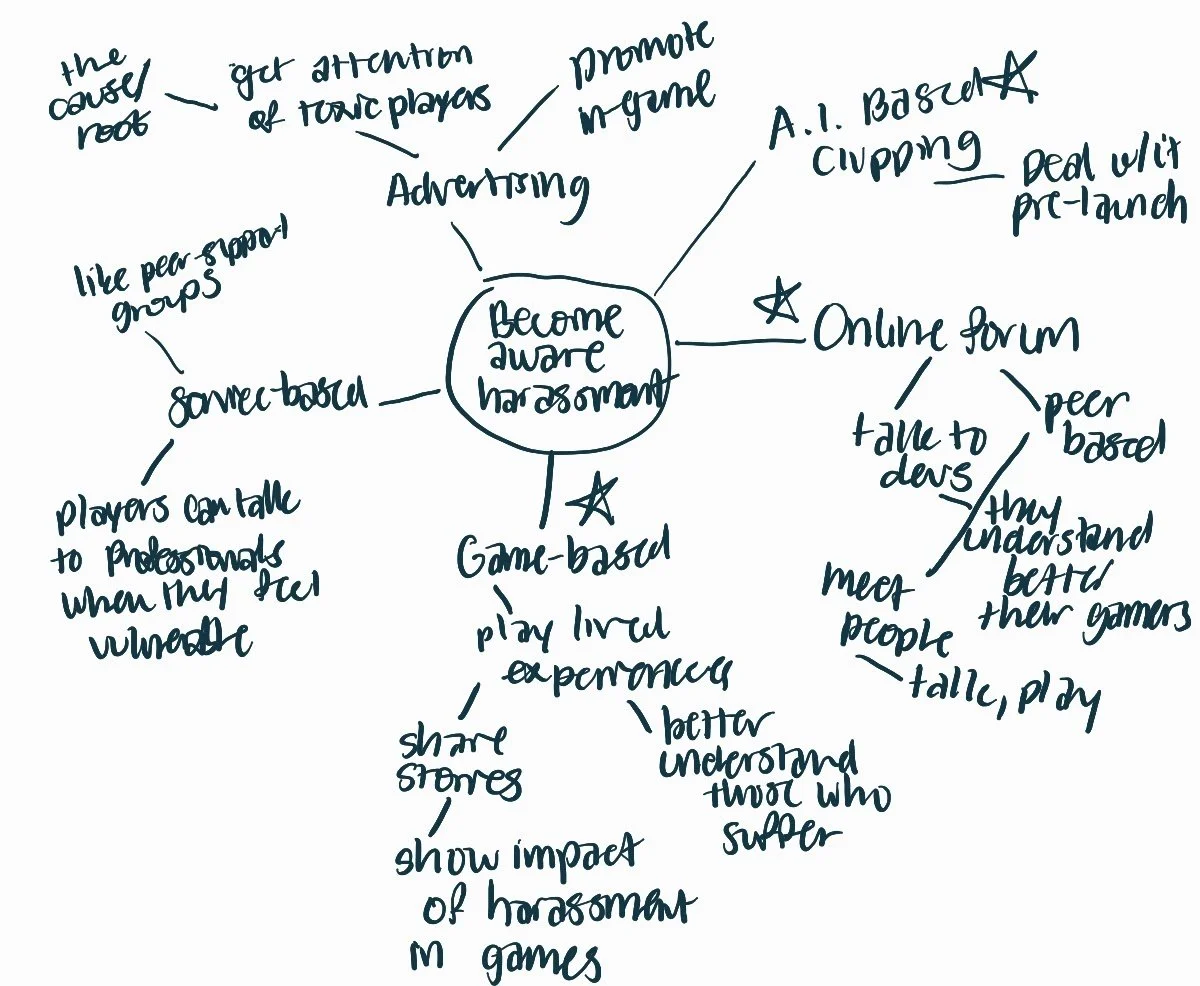

Having done our research on our target audience and their pain points, we focused our attention on the design question. To ideate, we used mind mapping to discuss possible ways to address existing harassment and to keep that harassment away from incoming players. I drew and expanded upon our ideas.

We came down to three ideas we wanted to further test:

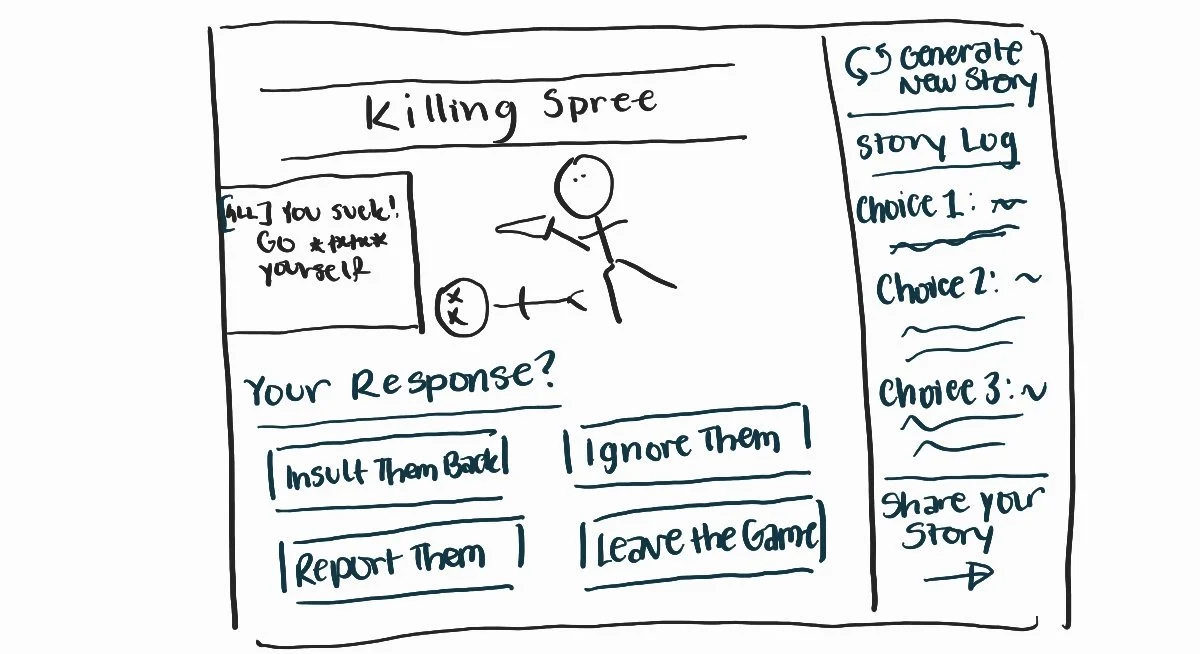

A game-based application where players play through lived experiences of harassment to better understand victims of such behavior

An online forum where players can talk to peers and a game's developers, providing a direct space for interaction

An A.I.-based clipping system that automatically clips harassment and directly supports the player in-game

To test our ideas, I sketched 3 visualizations of the concepts we chose. I also drew a storyboard of the game-based application to depict a possible usage scenario of the application in the current design space.

With our concepts visualized, we had a better idea of what they might look like, but it was important to get feedback from potential end users. So, our team performed concept testing with 5 participants, having them think aloud as they observed each concept’s visualizations and answered questions about them.

After testing our concepts with our end-users, we found general opinions on each idea. The online forum can help developers understand their players, but there is known harassment towards developers and the forum would be another place to moderate. The game-based application is good in that it teaches gamers about the impact of harassment, but there are concerns of relatability and motivation to play. The A.I. clipping is a direct way to deal with instances of harassment, but the way the A.I. detects harassment is important to identify.

Discussing the feedback, we narrowed it down to the game-based application and the A.I. clipping, seeing that online forums are already present in many online spaces (Twitter, Reddit). Between the two, I personally felt like the game-based app would be quite innovative in really diving deeper into the impact of harassment based on true stories. A.I. clipping, on the other hand, was a more obvious direct impact on gaming, being a practical tool for players.

Our team decided to move forward with the A.I. clipping because we felt that it would provide a more immediate and direct influence on current forms of toxicity and harassment, thus better empowering players to promote a healthier community.

Building The Solution: FindIt

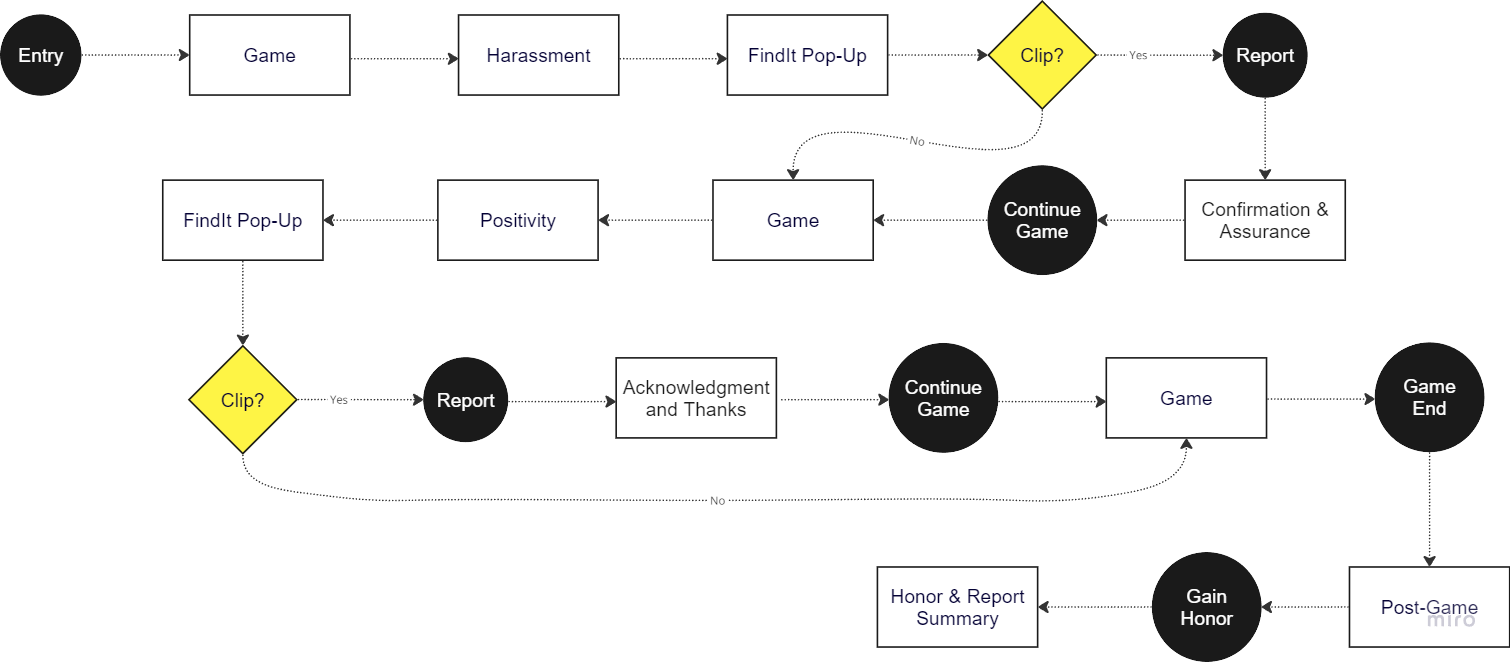

FindIt's prototype has two main components: an A.I.-based system that clips positive and negative interactions, and an honor system that is connected to the A.I. clipping system. Here's how it works:

When a player is being supported by a teammate, they are given an opportunity to clip that interaction and increase the teammate's honor score (a message is also sent to the supportive teammate to encourage them to continue this behavior).

When a player is being harassed by a teammate or enemy, they are given an opportunity to clip that interaction and decrease the harassing player's honor score (a message is also sent to the harassing player to discourage them from continuing this behavior).

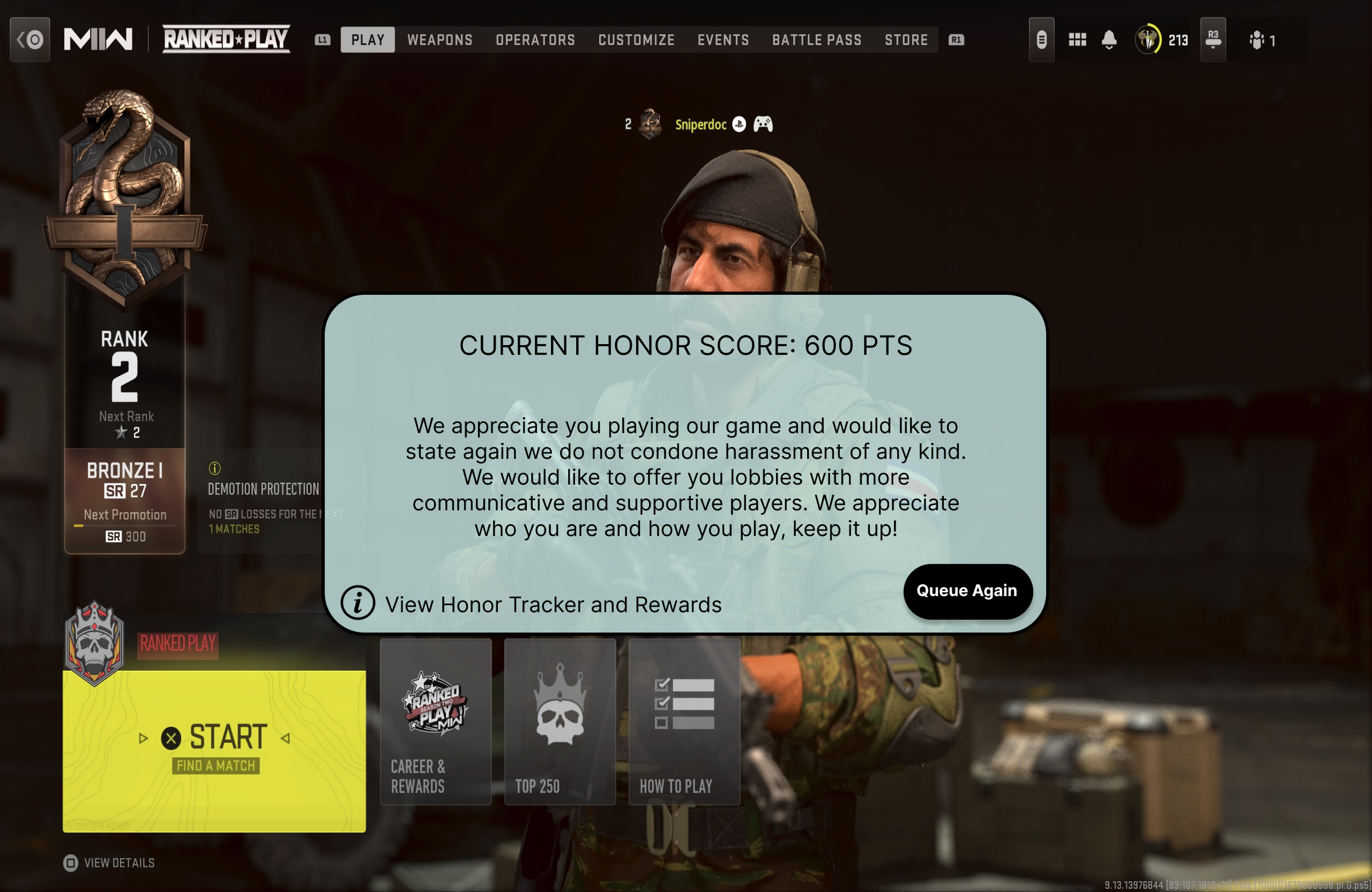

After the game ends, a final message is displayed to the player, the harassing player, and the supportive player. The message displays their honor score and, depending on the type of player, reminds them of the terms of service.

The honor score then serves as a further incentive: players with a high honor score will be rewarded with in-game cosmetics.
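As a rough illustration only, the clip-and-honor flow described above could be sketched as follows. This is not taken from the FindIt prototype, which is a design artifact rather than working software. The point values, the starting score, the reward threshold, the message wording, and every name in the code (`Player`, `ClipKind`, `apply_clip`, `post_game_message`) are assumptions made for the sake of the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class ClipKind(Enum):
    SUPPORT = "support"
    HARASSMENT = "harassment"


# Hypothetical values: the case study does not say how much a clip changes
# a score, where scores start, or what counts as a "high" honor score.
HONOR_DELTA = {ClipKind.SUPPORT: 5, ClipKind.HARASSMENT: -10}
STARTING_HONOR = 100
REWARD_THRESHOLD = 120


@dataclass
class Player:
    name: str
    honor: int = STARTING_HONOR
    clips: list = field(default_factory=list)


def apply_clip(target: Player, kind: ClipKind) -> str:
    """Record an accepted clip against `target` and return the in-game message."""
    target.honor += HONOR_DELTA[kind]
    target.clips.append(kind)
    if kind is ClipKind.SUPPORT:
        return f"{target.name}: a teammate appreciated your support. Keep it up!"
    return f"{target.name}: another player clipped your behavior as harassment."


def post_game_message(player: Player) -> str:
    """Build the end-of-game summary shown to a player."""
    message = f"{player.name}, your honor score is {player.honor}."
    # Only players clipped for harassment get the terms-of-service reminder.
    if ClipKind.HARASSMENT in player.clips:
        message += " Please review the terms of service."
    if player.honor >= REWARD_THRESHOLD:
        message += " You have unlocked an in-game cosmetic."
    return message
```

In this sketch a clip only takes effect once the clipping player accepts it, which mirrors the "given an opportunity to clip" wording above; how the A.I. detects a clippable moment is deliberately left out.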

The A.I. clipping system of FindIt is a valuable support tool for players during a game session. Existing report systems, such as the one in League of Legends, let players report others for toxicity after a game ends. However, by that time, those players have already been harassed and hurt. That's where FindIt comes in. Because it is a direct, easily accessible clipping tool within the game, players don't need to hold in those feelings when harassment happens; rather, the game immediately comes to the player's aid and makes them feel supported, promoting healthier player interactions.

The honor system we paired with A.I. clipping is meant to ensure that players play with who they want to play with. Currently, players who are not playing with friends are matched with random players who could very likely harass them. The honor system instead ranks players according to their in-game honor score and matches them with similarly ranked players, pairing positive players with like-minded people. This promotes healthy community building in the competitive gaming scene for those who wish to be kind, and weeds out negativity.
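One simple way to picture honor-based matching is to sort the queue by honor score and fill lobbies in order, so that neighbors in the ranking end up together. The sketch below is only an illustration under that assumption. The function name `match_by_honor`, the lobby size, and the sample scores are hypothetical, and a real matchmaker would also weigh skill rank, queue time, and region.

```python
def match_by_honor(honor_by_player: dict[str, int], lobby_size: int) -> list[list[str]]:
    """Group queued players into lobbies of similar honor score."""
    # Rank players from highest to lowest honor.
    ranked = sorted(honor_by_player, key=honor_by_player.get, reverse=True)
    # Fill lobbies in ranking order; the last lobby may be smaller.
    return [ranked[i:i + lobby_size] for i in range(0, len(ranked), lobby_size)]


queue = {"Ana": 130, "Bo": 70, "Cy": 125, "Di": 80}
lobbies = match_by_honor(queue, lobby_size=2)
# lobbies == [["Ana", "Cy"], ["Di", "Bo"]]
```

Grouping strictly by honor shrinks the pool of eligible players for each lobby, which is one reason longer queue times in less populated games remain an open question for this design.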

Before further building out visual artifacts such as wireframes and prototypes, our team wanted to think about the interactions that a user would have with an A.I.-based clipping system:

What is the primary interaction?

What paths can the user take to reach their goals?

What do these paths look like?

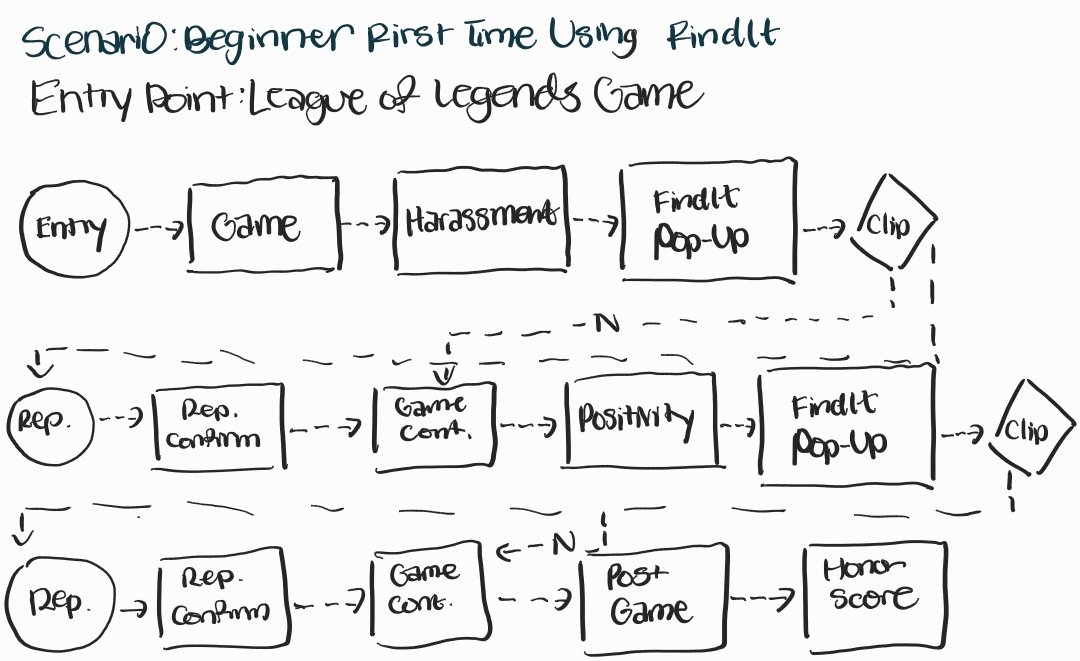

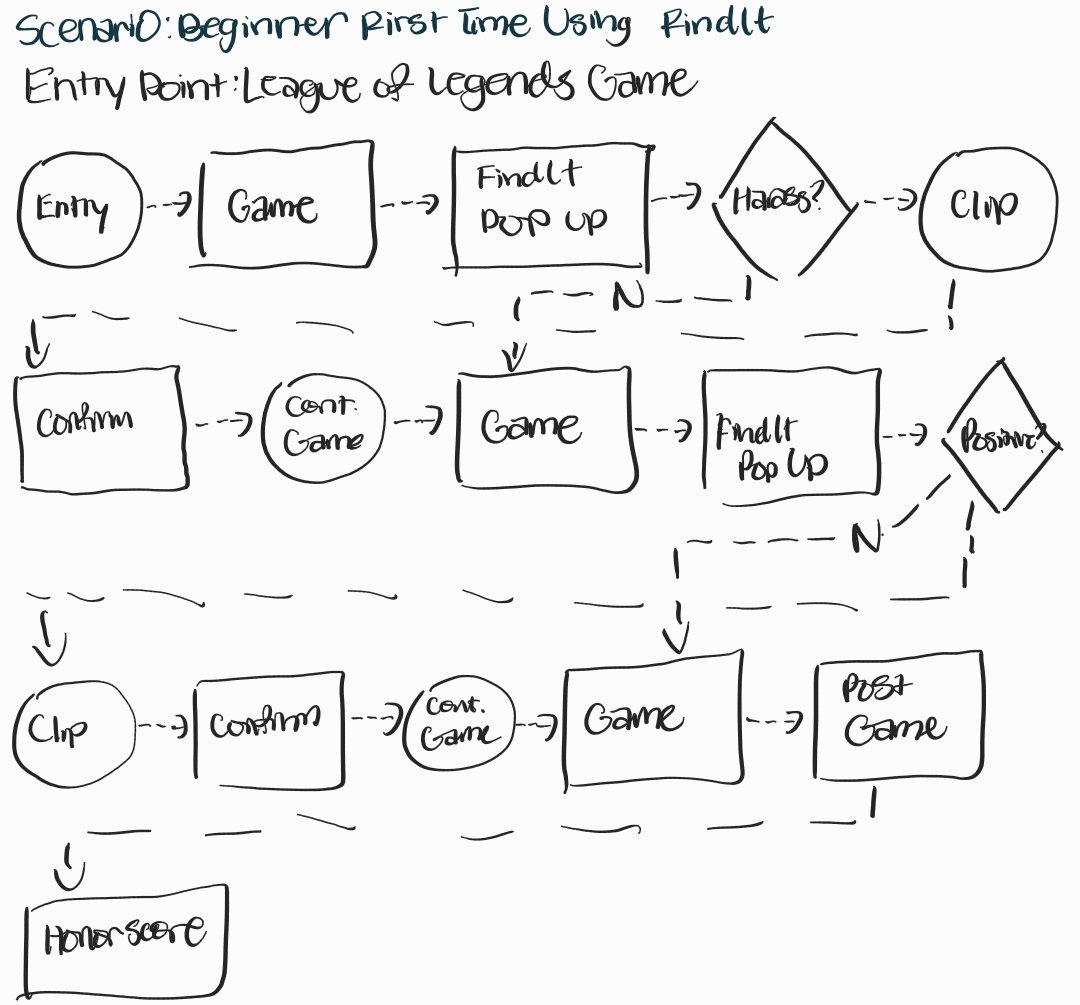

So, I sketched out three total iterations of a possible interaction flow using interaction flow diagramming.

Iteration 1

Iteration 2

Final Iteration

Testing Our Solution

For the first iteration of our FindIt prototype, we created a simple user interface design using the competitive game Call of Duty. We selected a few sample screens to mimic the interaction flow that we created: entering a game, encountering harassment and positivity, and viewing the post-game honor score breakdown. We created a simple overlay showing sample encounters with FindIt when it identifies clippable instances, as well as the post-game honor screen.

With this prototype, we performed prototype testing with 10 participants, asking our participants to perform think-alouds as they went through our prototype. Our main goals through testing were to understand how FindIt impacts the current gaming situation, how FindIt can improve, and whether players would use it.

While the tool should provide a better gaming experience, it should avoid interrupting gameplay and should offer a valid reason to be used beyond serving as a reporting tool.

With that in mind, we created our final mid-fidelity prototype.

Final Solution: FindIt

After receiving feedback from our prototype testing, we focused primarily on making FindIt less interruptive during gameplay, improving the wording of its supportive messages, and providing an incentive to use FindIt.

Created less intrusive pop-ups when FindIt identifies harassment or positivity

Gave users easy options to accept or decline the clipping (e.g., pressing L3 or R3 on a controller)

Used more concise and clear-cut wording to convey the intent to support players

Added a battle-pass-style system for the honor score, rewarding players with in-game cosmetics for promoting a positive and healthy community

Key Questions, Next Steps

Moving forward, we need to understand FindIt's viability in different competitive team-based games, the effectiveness of our current terminology, and how longer queue times affect less populated games.

We will continue to iterate our design through prototype testing and stakeholder interviews. This will allow us to create a product that helps create a more accepting, cooperative, and competitive gaming environment. Implementing these changes will make competitive gaming a more welcoming space and help people find a creative safe space that allows them to destress and have fun.

Our key questions moving forward for FindIt will be:

How does FindIt's UI and general layout affect its viability in different competitive team-based games?

How effective is our current terminology in ensuring that players understand our message and are still motivated to continue playing?

How can we further motivate players to promote peaceful gaming beyond the honor system, such as by consistently using the A.I. clipping for positivity?